UX process into agile

Lean helps you build the right thing. Agile helps you build the thing right.

My Role: Senior UX/UI Designer & Researcher.

Tools: Sketch, InVision, Hotjar, Abstract, Git, Visual Studio Code

From discovery phase to MVP

In October 2019, I took on a project to meet the UX needs of our client, AXA Partners in Barcelona. I began collaborating closely with the LAB team, and the Daimler project came to us.

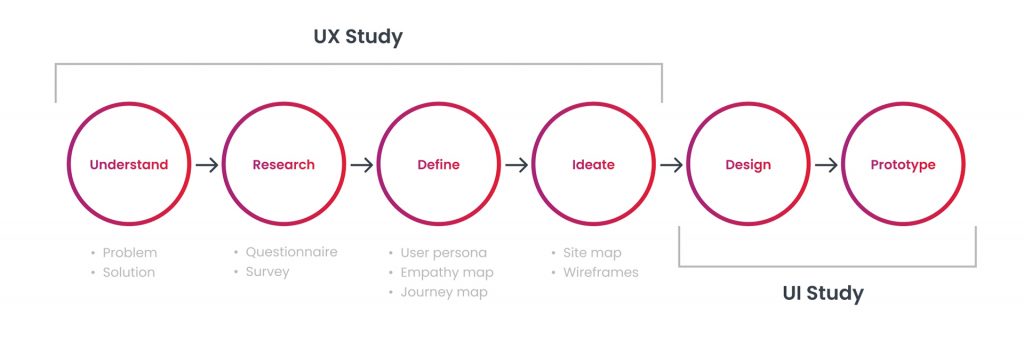

As a UX designer and researcher, my role included several tasks, as shown in the image below. Even though I was not involved in development itself, I was in continuous iteration with the dev team.

Description

Daimler

Daimler HUB is a React-based web application for managing roadside assistance incidents in 44 countries.